Image Server AI — GPU Inference in 55 Seconds


Oct 22, 2025

Pensive robot by Image Server AI

TL;DR

This post shares what I learned over three days of experimenting with my DGX Spark while building a text-to-image inference app. See the repo here.

Getting Started

It's been five days since I got my DGX Spark in the mail. It showed up on Friday, but I waited until Sunday to start experimenting.

When I first watched Jensen Huang's talk on it, I knew then it would be a great idea for anyone working in Machine Learning to own one. I primarily do MLOps, but my background in software engineering often pulls me into building ML applications too. I was also curious about diving deeper into machine learning concepts — and working with the DGX Spark felt like the perfect way to start.

Why Buy

Well, I like being first! My reservation number was #14127, but I saw people in the forums with six-digit numbers, so I was still early. I was curious about the workflow. I wanted to run models directly from my desktop, develop locally, and then move everything to the cloud. The DGX Spark lets me do exactly that.

And honestly — I've only been using it for three days, so I'm still just scratching the surface.

Learning

Inference on a single Blackwell GPU is about 32x faster than running the same workload on the CPU with 126 GB of unified memory.

Simply put: what took 30 minutes on CPU took just 55 seconds on GPU. That's a massive boost — and it makes sense why everyone's so hyped about GPUs.
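As a quick sanity check on that ratio, here is the arithmetic using the two rounded timings quoted above (30 minutes and 55 seconds); the variable names are just for illustration.

```python
# Timings reported in this post: ~30 minutes on CPU, ~55 seconds on GPU.
cpu_seconds = 30 * 60
gpu_seconds = 55

# Speedup is simply the ratio of the two wall-clock times.
speedup = cpu_seconds / gpu_seconds
print(f"GPU speedup: {speedup:.1f}x")  # prints "GPU speedup: 32.7x"
```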

Did I need to buy the DGX Spark to know that? Probably not. I'd seen similar gains back when I turned on GPU usage for training models at Pluralsight. Tasks that used to take four hours dropped to under 30 minutes.

The gotcha this time was that the hardware is so new that standard libraries like PyTorch haven't yet officially released versions compatible with the CUDA 13.0 Toolkit.

Fortunately, NVIDIA publishes its own version of PyTorch, and they maintain a Docker image packed with the right dependencies for my setup. That image saved me.

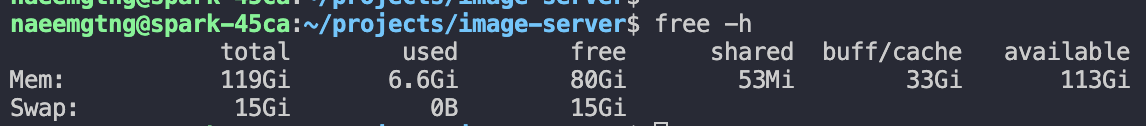

Memory Management

Free up your memory!

When the application first starts, memory usage sits around 11-13GB. But when a request kicks off inference, I watched it spike to 116GB. After inference, it stays near the peak — unless you explicitly clean up.

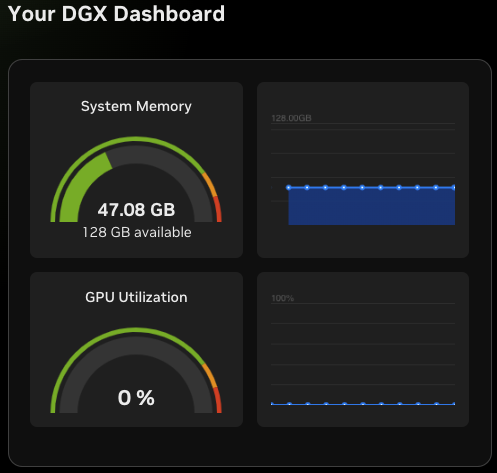

Triggering garbage collection after sending the response drops memory usage back to the mid-40GB range. The remaining ~30GB above the startup baseline is likely tied to the loaded model, which the OS keeps cached in memory.
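A minimal sketch of what a cleanup step like this might look like, assuming a PyTorch-based pipeline. The `PipelineHolder` class and `release_memory` function are hypothetical names for illustration and are not taken from the repo; the only library calls used are `gc.collect()` and `torch.cuda.empty_cache()`.

```python
import gc


class PipelineHolder:
    """Hypothetical container for the loaded text-to-image pipeline."""

    def __init__(self):
        self.pipeline = None


def release_memory(holder: PipelineHolder) -> None:
    """Drop the pipeline reference, then ask Python and CUDA to free memory."""
    holder.pipeline = None  # remove the strong reference this holder owns
    gc.collect()            # collect unreachable objects, including cycles
    try:
        import torch
    except ImportError:
        return  # PyTorch is not installed, so there is nothing GPU-side to release
    if torch.cuda.is_available():
        torch.cuda.empty_cache()  # release cached, unused blocks held by PyTorch
```

Calling something like `release_memory(holder)` only after the response has been sent keeps the cleanup cost out of the request's latency. Memory is only reclaimed if no other code still holds a reference to the pipeline.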

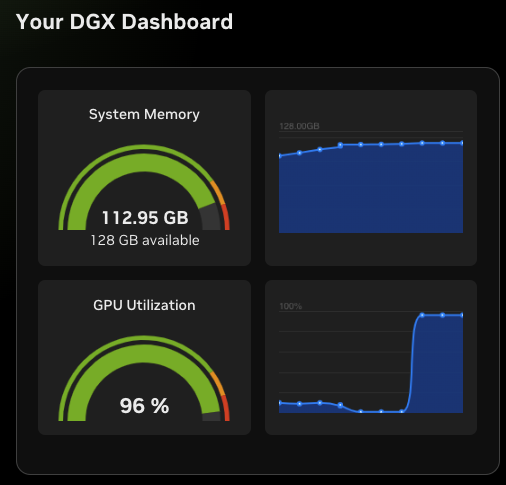

Being able to monitor memory in real time is a huge advantage when developing AI applications. Without a DGX Spark, you can get similar information from tooling in both Linux and Python, but the built-in dashboard makes it easy. The screenshot below shows the DGX Dashboard during inference.

Dashboard showing spiked GPU and high CPU

You can't see it here, but CPU utilization rose gradually — while GPU utilization surged almost instantly. It's doing exactly what it should.

High memory usage during inference

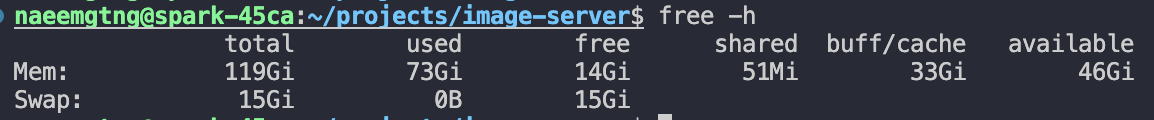

After clearing the pipeline and running garbage collection, we see a huge change in available, used, and free memory:

Dashboard showing low memory usage

Terminal view low memory usage

In case you're wondering, clearing memory at this stage does not affect how fast the model runs inference on subsequent requests. In fact, times are consistent across requests whether I cleared the memory or not. Still, I think it's best practice to free up memory whenever you can do so safely.
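For machines without the dashboard, the Linux-side tooling mentioned earlier can be approximated in a few lines of Python. This is a sketch that reads the standard `/proc/meminfo` file (Linux only); the function names are hypothetical, and this is not how the DGX Dashboard itself gathers its numbers.

```python
def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value}, sizes in KiB."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts and parts[0].isdigit():
            info[key.strip()] = int(parts[0])
    return info


def memory_snapshot_gib(path: str = "/proc/meminfo") -> dict[str, float]:
    """Return total and available system memory in GiB (Linux only)."""
    with open(path) as f:
        info = parse_meminfo(f.read())
    kib_per_gib = 1024 ** 2
    return {
        "total": info["MemTotal"] / kib_per_gib,
        "available": info["MemAvailable"] / kib_per_gib,
    }
```

Logging `memory_snapshot_gib()` before inference, after inference, and after cleanup gives a rough text version of the same before-and-after comparison.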

<edit>

Load Testing and Caching

While iterating on this project, I decided to dig deeper into memory behavior and see how caching my model was truly affecting the application. I started adding some quick benchmarks and simple log statements to understand what advantage I was losing by clearing the cache after every run. I also re-evaluated my approach. Initially, I was sending one request at a time. This time, I disabled cleanup and sent multiple requests to simulate a real server environment — something closer to what you'd expect in production. Think of it like basic load testing.

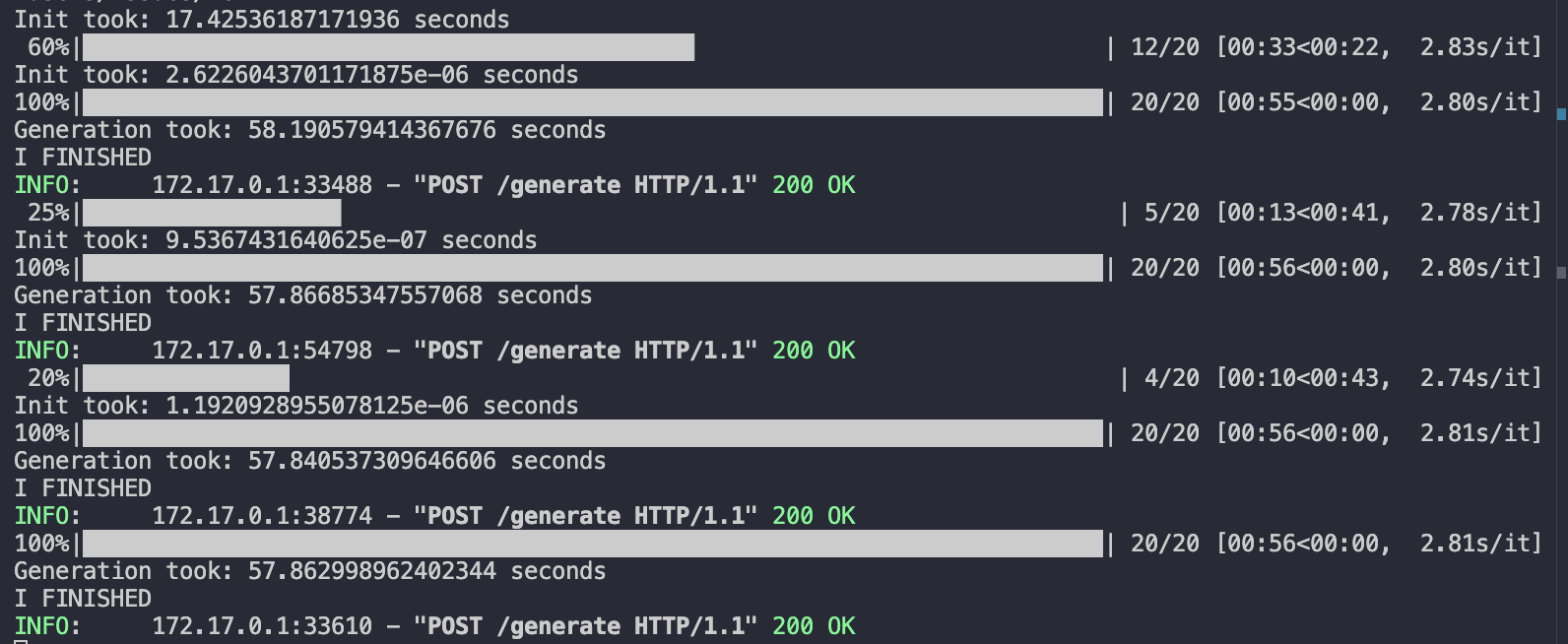

After a few requests, the results spoke for themselves.

Running Load Test

First, I commented out my drop_cache() function call. I didn't use any particular framework; I just opened a few terminal windows and sent the same request from each, one immediately after the other. See the results below.
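The test above was done by hand from several terminals. As a scripted alternative (a different technique from the one used here), a small thread pool from the standard library can fire the same request several times at once and time each call. The endpoint URL and JSON payload in `post_prompt` are placeholders, not the app's real API.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from urllib import request


def timed_call(send) -> float:
    """Run one request callable and return its wall-clock duration in seconds."""
    start = time.perf_counter()
    send()
    return time.perf_counter() - start


def run_load_test(send, num_requests: int = 4) -> list[float]:
    """Fire num_requests calls concurrently and collect their durations."""
    with ThreadPoolExecutor(max_workers=num_requests) as pool:
        futures = [pool.submit(timed_call, send) for _ in range(num_requests)]
        return [f.result() for f in futures]


def post_prompt(url: str = "http://localhost:8000/generate") -> bytes:
    """Hypothetical request: the URL and payload are placeholders."""
    body = b'{"prompt": "pensive robot"}'
    req = request.Request(url, data=body, headers={"Content-Type": "application/json"})
    with request.urlopen(req, timeout=300) as resp:
        return resp.read()
```

With a server running, `run_load_test(post_prompt, num_requests=4)` would return one duration per request, which can be compared with and without cleanup enabled.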

Benchmarking results with cached model

The first line, Init took: 17.4253... seconds, shows that after startup the initial run took about 17.4s. On subsequent runs, with the pipeline cached, initialization took between 1 and 10 seconds. That's a win. It may seem minor, but in a large-scale application handling millions of requests per second, not caching the inference pipeline would cost serious time and compute. Inference itself consistently took between 55s (best case) and 59s.

Out of curiosity, I re-enabled the drop_cache() function and sent multiple requests again. Short answer: I crashed not only the server but also the computer that was serving the application. This shows how much caching the pipeline helps, and it also suggests that pipeline initialization and inference may need to be locked together to prevent race conditions.
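One way the locking idea could look, as a sketch rather than the app's actual code: a single `threading.Lock` guards both the lazy load and the inference call, so concurrent requests cannot load the model twice or clear it mid-run. `CachedPipeline` and its `loader` argument are hypothetical names.

```python
import threading


class CachedPipeline:
    """Loads a pipeline once and serializes access to it with one lock."""

    def __init__(self, loader):
        self._loader = loader      # callable that builds the (expensive) pipeline
        self._pipeline = None
        self._lock = threading.Lock()

    def run(self, prompt):
        # Holding the lock across load and inference means only one request
        # touches the pipeline at a time, at the cost of no parallelism.
        with self._lock:
            if self._pipeline is None:
                self._pipeline = self._loader()
            return self._pipeline(prompt)

    def drop(self):
        # Clearing under the same lock avoids dropping the pipeline mid-inference.
        with self._lock:
            self._pipeline = None
```

The trade-off is throughput: requests queue behind each other. On a single GPU where one inference already uses most of the memory, that may be acceptable.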

Why It Works

Without caching, I have an instant performance killer.

- For a 57s inference, a 17s reload adds about 30% to each request

- Over 100 requests: 1700s wasted just reloading

With caching, I have some solid wins.

- Keeps weights in memory — avoiding the 17s reload

- Cuts initialization overhead, saving up to ~16s per request (about 17s cold versus as little as 1s cached)
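The numbers in the two lists above can be reproduced with a few lines of arithmetic. The inputs are the rounded figures from the benchmark (17s cold initialization, 57s inference as a midpoint of the 55-59s range); the variable names are illustrative.

```python
init_seconds = 17       # approximate cold pipeline initialization
inference_seconds = 57  # midpoint of the observed 55-59 s inference range
num_requests = 100

# Relative cost of reloading the pipeline on every request.
overhead_pct = init_seconds / inference_seconds * 100
# Total time spent only on reloading across all requests.
wasted_seconds = init_seconds * num_requests

print(f"Overhead per request: {overhead_pct:.0f}%")  # prints "Overhead per request: 30%"
print(f"Reload time over {num_requests} requests: {wasted_seconds} s")  # prints "... 1700 s"
```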

</edit>

The Setup

When I first started working on the Image Server, I followed my usual Python workflow — using tools like uv and .venv. But that approach wasn't giving me what I needed.

I ran into this weird issue where, because the app was using a venv inside a container, it couldn't access the system-level CUDA dependencies. Basically, it was trapped inside a virtual environment inside another virtual environment.

After a lot of trial and error, I started digging through the NVIDIA forums. I found a post that described exactly what I was running into. I left a comment, and a moderator plus an NVIDIA engineer pointed me to the company's proprietary PyTorch Docker image — the one with full CUDA 13.0 support built in.

You can see that thread here.

Once I realized the venv setup was blocking me, I scrapped it. I started developing directly inside the container, did a quick pip install for my dependencies, and — voilà — I was using the GPU.
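A small diagnostic along these lines can help spot the same situation. It is a sketch with hypothetical function names: it checks whether the interpreter is running inside a venv and whether the PyTorch it imports can see a CUDA device. It does not reproduce the exact failure described above.

```python
import sys


def in_virtualenv() -> bool:
    """True when the interpreter runs inside a venv (prefix differs from base)."""
    return sys.prefix != sys.base_prefix


def cuda_status() -> str:
    """Report whether the importable PyTorch build can use a CUDA device."""
    try:
        import torch
    except ImportError:
        return "torch not installed"
    if torch.cuda.is_available():
        return f"CUDA available: {torch.cuda.get_device_name(0)}"
    return "torch installed, but CUDA not available"


if __name__ == "__main__":
    print(f"Inside a venv: {in_virtualenv()}")
    print(cuda_status())
```

Running this both inside and outside the venv, within the same container, shows whether the two environments resolve to different PyTorch installs.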

Wrapping Up

Three days in, and I've already learned a ton, more than I can share in this one post. There's still more to explore, but the DGX Spark has been a powerful hands-on way to push deeper into GPU workflows and text-to-image inference.

As always, thanks for reading and look out for the follow-up!